What I Learned: Navigating the AI Landscape - Upskilling Your Workforce for a Safer Future

AI offers incredible potential. But business owners also need to consider the risks.

Last week, I had the pleasure of attending my firm’s FY23 annual assurance event. As anticipated, it was an engaging experience filled with workshops, keynote speakers, and yes, karaoke. One emerging theme that particularly caught my attention was the discussion of large language models (LLMs) like ChatGPT. Like many other organizations today, my firm recognizes the immense opportunities these AI tools offer and is investing significantly in harnessing their capabilities.

However, despite the enthralling potential and staggering investments, I believe many businesses remain underprepared for the inevitable challenge of training their employees to use these AI tools effectively, securely, and safely, especially when handling large amounts of sensitive data.

Proper training and risk awareness regarding AI tools will be non-negotiable for any company aiming to gain a competitive advantage without exposing itself to unnecessary risk. Upskilling your workforce is essential both for getting the most out of these tools and for identifying and correcting AI-induced mistakes before they become costly.

In this article, I aim to explore some of the most significant risks businesses may face during the integration of AI into their workflow and propose viable solutions to mitigate these risks.

Key Risks

1. Security and Privacy Concerns

Data security and privacy are not new to the digital conversation; they have remained at the forefront for good reason. As a business owner, if you are considering integrating AI, data security should be your top priority.

Be vigilant with your data: Be extremely cautious with your company's data and even more so with your customers' data. Poor data handling practices can result in data breaches, loss of customer trust, reputational damage, litigation, and regulatory violations, all of which can severely harm your business.

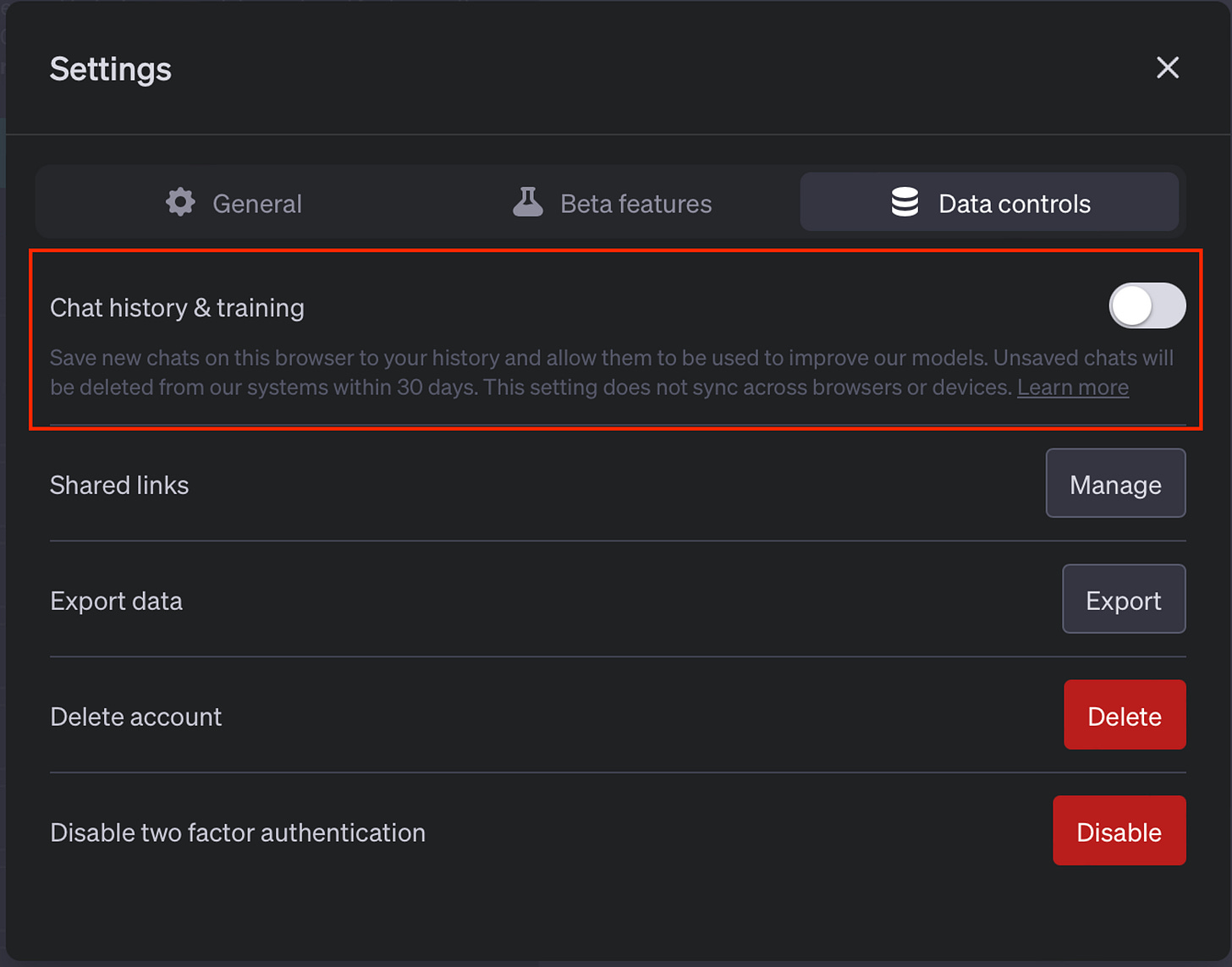

Understanding UI and settings: The data you provide to LLM tools such as OpenAI's ChatGPT is collected and may be used to train future models. While OpenAI has mentioned an upcoming business offering with better data protection, the specifics are still undisclosed, and it is not currently available. Therefore, before deploying ChatGPT within your organization, ensure your employees understand the potential risks associated with incorrect usage. Here are some precautionary measures to take:

Turn off Chat History & Training: This setting prevents your conversations from being used to train the model, which reduces the risk of inadvertently leaking sensitive or confidential data.

Use the data controls form: If you need to keep the chat history on but do not want your data used for training, consider using the form available at OpenAI's Data Controls FAQ.

Anonymize your data: Remove any identifying information from your prompts. For instance, instead of inputting a specific name in an email, use placeholders like “Dear [NAME]”.
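As a rough illustration of the anonymization step, the sketch below replaces email addresses, phone numbers, and a supplied list of names with placeholders before a prompt leaves your machine. The function name `anonymize`, the placeholder labels, and both regular expressions are assumptions made for this example; they are deliberately simple and will miss many real-world formats, so treat this as a starting point rather than a complete safeguard.

```python
import re

# Illustrative patterns only (assumptions for this sketch); real PII
# detection needs much broader coverage than two regular expressions.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def anonymize(text: str, known_names: list[str]) -> str:
    """Replace emails, phone numbers, and known names with placeholders."""
    # Emails and phone numbers go first so that a name appearing inside
    # an email address is removed together with the address.
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    # Replace longer names first so "Jane Doe" is handled before "Jane".
    for name in sorted(known_names, key=len, reverse=True):
        text = re.sub(rf"\b{re.escape(name)}\b", "[NAME]", text, flags=re.IGNORECASE)
    return text


prompt = "Dear Jane Doe, please reply to jane.doe@example.com or call +1 415 555 0100."
print(anonymize(prompt, ["Jane Doe"]))
# Dear [NAME], please reply to [EMAIL] or call [PHONE].
```

The list of names has to come from somewhere you already trust, such as a client register. Pattern matching alone cannot reliably recognize names, so a manual read-through before sending is still worthwhile.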

Remember, while ChatGPT can bring numerous benefits to your business, you are ultimately responsible for protecting your data and your clients' data.

2. Verification of Generated Information

ChatGPT has proven to be a groundbreaking tool for research and learning. However, these models are known to "hallucinate" occasionally—generating convincing, yet entirely fictional information. Here are a few best practices to counteract this:

Work with the AI, not through it: The more precise the information you provide to the LLM, the better it can generate the desired response.

Utilize best-practice prompting: Structuring prompts well, such as giving the model a specific role or adding "let’s work this out step-by-step to ensure we have the right response", can noticeably improve the accuracy of its answers. More complicated strategies, such as the “Tree of Thoughts” method, also exist and may be more effective on harder problems.

Always double-check: Ensure that the AI-generated information aligns with other sources before making any important decisions based on it.
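The prompting advice above can be turned into a small reusable template. The sketch below only assembles the prompt text; it does not call any model. The function name `build_prompt` and its parameters are hypothetical, chosen for this example, while the step-by-step cue is the phrase quoted earlier in this section.

```python
# The cue quoted in this article, appended to every prompt.
STEP_BY_STEP_CUE = (
    "Let's work this out step-by-step to ensure we have the right response."
)


def build_prompt(role: str, task: str, context: str = "") -> str:
    """Assemble a prompt with an explicit role, optional context, and a reasoning cue."""
    parts = [f"You are {role}."]
    if context.strip():
        # Precise background information helps the model stay on target.
        parts.append(f"Context:\n{context.strip()}")
    parts.append(f"Task: {task.strip()}")
    parts.append(STEP_BY_STEP_CUE)
    return "\n\n".join(parts)


print(
    build_prompt(
        role="an experienced financial analyst",
        task="Summarize the three largest risks described in the memo.",
        context="The memo concerns a mid-sized retailer. Client names have been replaced with [NAME].",
    )
)
```

Keeping the template in one place makes it easier for a team to apply the same structure consistently, and to review or adjust the wording later.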

3. Over-reliance on AI & The Decline of Critical Thinking

As AI tools like ChatGPT become more reliable, there's a looming risk of over-reliance, leading to a decline in critical thinking among employees. To combat this:

Foster a culture of accountability: Remind your employees frequently that they are responsible for their output, regardless of whether it's AI-generated or not.

Incorporate higher-level management review: Managers should critically review the quality of AI-generated reports or other outputs.

Implement regular audits: Having an independent reviewer, whether an internal audit function or a third party, perform regular quality checks on AI-generated content can be invaluable in identifying and correcting errors before any critical decisions are made.

Conclusion

The integration of AI tools like ChatGPT into your business workflow can offer incredible benefits, from automation to efficient data processing. However, to truly harness the potential of AI without compromising security and accuracy, it is essential to upskill your workforce and instill a culture of accountability and vigilance. By recognizing and proactively managing the associated risks, your business can sail smoothly through the exciting waters of AI-driven innovation.