What I Learned: How to make an LLM, and why Nvidia is winning

What I learned from a brilliant video by Andrej Karpathy and why Nvidia is making people rich.

Hi everyone,

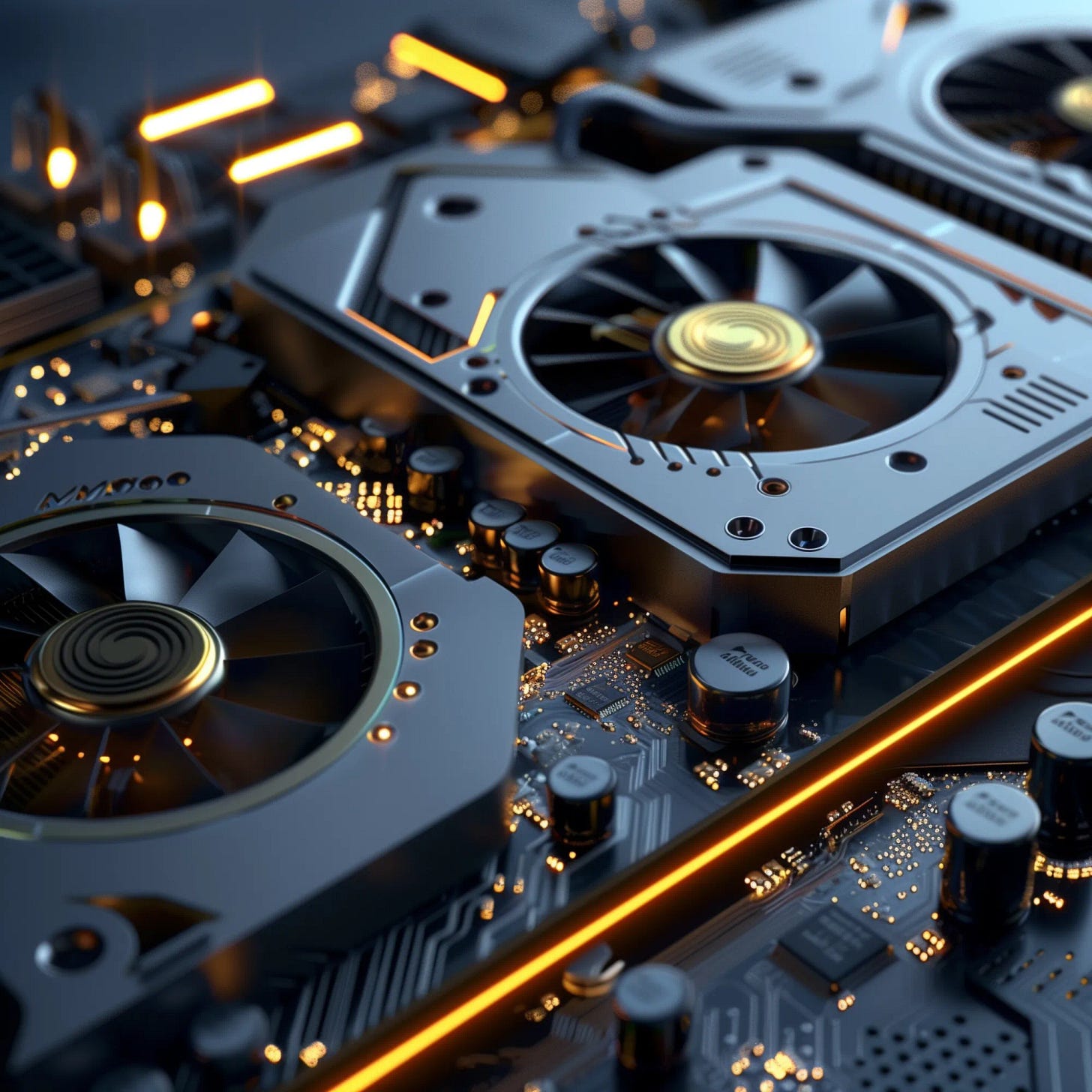

I recently came across a YouTube video by Andrej Karpathy that gives an introductory overview of large language models (LLMs). Besides being a clear explanation of the technology, it offered great insight into why companies like Nvidia have seen their earnings and stock prices surge on demand for their GPUs. Here's what I learned:

Who is Andrej Karpathy?

Andrej Karpathy is a Slovak-Canadian AI researcher known for his work at Tesla and OpenAI. At Tesla, he was the director of AI and led the team behind its Autopilot self-driving technology, and at OpenAI, he was a founding member of the research team. Karpathy earned his Bachelor’s degree from the University of Toronto, his Master’s from the University of British Columbia, and his PhD from Stanford. He recently made headlines by leaving OpenAI to focus on educational content through his YouTube channel.

The Anatomy of an LLM:

A basic LLM consists of just two components: a massive parameters file created through extensive "training," and a small program file that runs the model using those parameters. The parameters file, generated in two key steps, is where the magic happens.
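To get a feel for how "massive" the parameters file is, here is a rough back-of-the-envelope sketch. The 70-billion-parameter count and the 2 bytes per parameter (16-bit numbers) are assumptions I picked for illustration, and the function name is made up; a real file also carries a little extra metadata.

```python
def parameter_file_size_gb(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Approximate size of a raw parameters file in gigabytes (1 GB = 10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Assumed example: 70 billion parameters, each stored as a 2-byte number.
size_gb = parameter_file_size_gb(70_000_000_000)
print(f"{size_gb:.0f} GB")  # prints "140 GB"
```

The program that runs the model is tiny by comparison, which is why nearly all of the effort goes into producing the parameters.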

Step 1: Pre-training

This stage begins with the collection of a vast amount of text data from the internet. For context, the dataset for GPT-3, OpenAI's previous generation LLM, started from roughly 45TB of raw text before filtering, equivalent to the word count of approximately 90,000,000 full-length novels. Subsequent models like GPT-4 were likely trained on considerably more, perhaps 10 to 100 times as much.

The data is then processed through what amounts to a massive compression algorithm, resulting in a crude version of the large parameters file. It is not a perfect representation of all the text data, but it basically contains the gist of everything it was trained on.

However, at this point the model isn’t very useful. It doesn’t answer questions effectively, and when prompted it just spouts information, code, or more questions in response. In essence, the model is just “dreaming” webpages like the ones it has seen before, such as a slightly-off Amazon product page or something that reads like a Wikipedia article.
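To make the "dreaming" idea concrete, here is a toy sketch. It is not how a real LLM works internally (those use neural networks with billions of parameters); it is a tiny word-pair model I made up for illustration, assuming that generation boils down to repeatedly picking a plausible next word. The names `train_bigram_model` and `dream` are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram_model(text: str) -> dict[str, list[str]]:
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return dict(followers)


def dream(model: dict[str, list[str]], start_word: str,
          max_words: int = 12, seed: int = 0) -> str:
    """Generate text by repeatedly sampling a word that followed the last one."""
    rng = random.Random(seed)
    output = [start_word]
    while len(output) < max_words:
        candidates = model.get(output[-1])
        if not candidates:  # this word was never followed by anything
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
print(dream(model, "the"))
```

The output looks like the training text without being a copy of it, which is the same flavor of "slightly-off" that a base model produces at a vastly larger scale.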

This step is by far the most expensive part of the process. It requires thousands of powerful state-of-the-art GPUs running for days or weeks while all that data is compressed into the parameters file. Llama-2, Meta’s current open source model, for example, was trained on 2,048 Nvidia A100 GPUs for 23 days and cost around $1.7m. However, Nvidia's newer GPU, the H100, is roughly 5-10x more powerful. Meta has already committed to buying 350,000 of them for around $30,000 each.
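As a quick sanity check on those training figures, here is the arithmetic spelled out. It only uses the numbers quoted above; the assumptions (mine) are that every GPU ran around the clock for the whole period and that the quoted cost is purely GPU rental.

```python
gpu_count = 2_048            # A100 GPUs, from the figures above
training_days = 23
training_cost_usd = 1_700_000

# Assumes all GPUs ran 24 hours a day for the full 23 days.
gpu_hours = gpu_count * training_days * 24
cost_per_gpu_hour = training_cost_usd / gpu_hours

print(f"{gpu_hours:,} GPU-hours")                    # prints "1,130,496 GPU-hours"
print(f"${cost_per_gpu_hour:.2f} per GPU-hour")      # prints "$1.50 per GPU-hour"
```

So the headline cost works out to roughly a dollar and a half for every hour a single GPU spends training.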

So, while it’s doubtful that all of those GPUs will be used to train a single model, the AI compute power available to Meta is likely to increase by somewhere between roughly 850 and 1,700 times compared with the Llama-2 training run (about 170 times as many GPUs, each 5-10x more powerful). And, while Llama-2 is already one of the most powerful LLMs available, it’s fair to say the next generation of models trained on the H100s is going to represent a substantial improvement.
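Here is where that multiple comes from, using only the figures quoted above. The assumption (mine) is that compute scales linearly with the number of GPUs and with the per-GPU speedup, which ignores real-world overheads like networking.

```python
a100_count = 2_048                     # GPUs used for the Llama-2 run described above
h100_count = 350_000                   # H100s Meta is buying
speedup_low, speedup_high = 5, 10      # H100 vs A100, per the rough estimate above

gpu_ratio = h100_count / a100_count
multiple_low = gpu_ratio * speedup_low
multiple_high = gpu_ratio * speedup_high

print(f"{gpu_ratio:.0f}x as many GPUs")
print(f"{multiple_low:.0f}x to {multiple_high:.0f}x the compute")
```

The low end lands a bit under 1,000 and the high end well above it, so the exact multiple depends heavily on which speedup figure you believe.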

Furthermore, Meta is of course not the only company making these kinds of investments. All the large technology companies are investing heavily in the space, and the competition for supremacy in accuracy, speed, and cost of use is likely to push the capabilities of these AI tools to incredible heights.

Step 2: Fine-tuning

Fine-tuning is where the LLM actually becomes useful. The goal of this stage is to take the crude model and teach it how to answer questions. For most LLMs, that means acting like an assistant: you ask it a question and it provides a response.

AI researchers refine the model using high-quality Q&A pairs written by people. While it remains expensive to collect these Q&A pairs from real humans, the computational power required to continue training the model on the data is much lower. In fact, the cost of this stage of the training is expected to continue to decrease as the process becomes increasingly automated. For example, once enough manual training has been done that the LLM can provide a quality response, you can instead ask it to provide two answers and just ask humans to select the better one. This is not only easier for people than coming up with high-quality answers, but can actually be handed off to another AI model, so the AI begins to improve itself.
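For the curious, here is a minimal sketch of how "pick the better of two answers" can be turned into a training signal. This is my assumption about one common approach (a logistic, Bradley-Terry style comparison), not something spelled out in the video, and the scores and the `preference_loss` name are hypothetical.

```python
import math


def preference_loss(score_chosen: float, score_rejected: float) -> float:
    """Penalty that is small when the chosen answer scores well above the rejected one.

    Computes -log(sigmoid(score_chosen - score_rejected)).
    """
    margin = score_chosen - score_rejected
    return math.log1p(math.exp(-margin))


# Hypothetical scores a model assigned to two answers; the human preferred the first.
print(preference_loss(2.0, 0.0))   # model agrees with the human: small penalty
print(preference_loss(0.0, 2.0))   # model disagrees with the human: large penalty
```

Training then nudges the scores so that this penalty shrinks across many such comparisons, which is far cheaper than having people write every ideal answer by hand.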

Scaling Laws & What Comes Next

According to Karpathy, the performance of LLMs is predictably influenced by two key factors: the number of parameters in the network, and the amount of data that the model is trained on.

Most importantly, so far these trends show no signs of topping out.
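To illustrate what "predictable" means here, below is a toy sketch of a scaling curve. The power-law shape is a common way to write such relationships, but every constant in it is a made-up placeholder for illustration, not a measured value, and `predicted_loss` is a hypothetical name.

```python
def predicted_loss(num_parameters: float, num_tokens: float,
                   floor: float = 1.7,
                   a: float = 400.0, alpha: float = 0.34,
                   b: float = 400.0, beta: float = 0.28) -> float:
    """Toy scaling curve: error shrinks smoothly as parameters and data grow.

    All constants are invented placeholders, chosen only to show the shape.
    Lower loss means better next-word prediction.
    """
    return floor + a / num_parameters**alpha + b / num_tokens**beta


# Grow the model and the dataset together by 10x at each step.
for scale in (1, 10, 100):
    loss = predicted_loss(1e9 * scale, 1e11 * scale)
    print(f"{scale:>3}x scale -> predicted loss {loss:.3f}")
```

The point is that you can estimate how much better a model will be before spending the money to train it, just by plugging in a bigger model and more data.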

This is why there is such a huge spike in demand for extremely powerful hardware, such as the GPUs Nvidia produces. As impressive as GPT-4 is, state-of-the-art models still have a great deal of room for improvement, and much of that improvement can be achieved relatively easily by just adding more computing power. Basically, we have a potentially world-changing technology whose main limiting factor in the short term is the amount of money thrown at it.

Conclusion

Overall, I found the video exceptionally enlightening, offering a comprehensive understanding of both the technology behind LLMs and the business dynamics driving the demand for AI-focused GPUs. I highly recommend watching the full video to anyone interested in the technology.